Findkit updates the search index by running a website crawler eg. a bot which walks site links and extracts the relevant text content straight from the HTML markup. The obvious benefit from this is that you can get content from sites you don’t maintain yourself and easily index multiple-domains to a single index but we often get asked why not provide an index update API for sites you own? Although we might provide such API in future we believe it still would be better to use our crawler for most cases.

Best code is no code!

When using API based index updates you must inherently add custom code which sends the page content to the index when ever the page has been updated. On surface this sounds simple: Just post the content column of the page to the API in a post save handler. You might even find a plugin for your CMS which does this for you.

But there are caveats: The text users want to search for might not all live in the content column. Additional text might come from various other sources: Meta fields, other pages embedded in the page or even data that is fetched from a completely different system. To make them searchable you must add custom code for all of this which can quickly get out of hand. Crawler based solution does not care. It just picks up the text present in the HTML. No coding required. Findkit Crawler can automatically detect relevant text content in most cases which can get you started instantly but for production we recommend marking with the content data attributes or with CSS selectors.

Crawler lags behind?

A common concern is that index lags behind the real content since crawlers are often ran in a fixed schedule. Findkit crawler can crawl the pages on demand every time the page is updated. Here’s a curl example how to trigger a crawl for a given URL:

curl -X POST \

--fail-with-body \

--data '{"mode": "manual", "urls": ["https://example.com/page"]}' \

-H 'Content-Type: application/json' \

-H "Authorization: Bearer $FINDKIT_API_KEY" \

https://api.findkit.com/v1/projects/p68GxRvaA/crawlsand Findkit Crawler will recrawl the given URL instantly. The crawl will be executed asynchronously in the background.

This is something you can put to the post save handler or if you are building a statically generated site you could launch a partial crawl after the build which would crawl the updated pages based on the sitemap last modified timestamps. This is implemented in our WordPress Plugin if you happen to use WordPress. Otherwise check out our REST API docs.

There is an API after all!

What about adding tags, custom fields etc. to the indexed pages? There actually is an API, just not a “REST” API. The transport mechanism is just bit different: You expose a JSON document on the page which is picked up by crawler. It looks like this:

<script id="findkit" type="application/json">

{

"title": "Page title",

"tags": ["custom-tag1", "custom-tag2"]

}

</script>This is called “Findkit Meta Tag“. The WordPress plugin will automatically generate this from the page taxonomies etc.

Private Websites

What about websites that are behind a login screen? You can add custom request headers to be sent with every request the crawler makes and use those to let the crawler pass the login.

A cool use case would be newspaper with a paywall: Let the crawler to see the full page content but require users to login, leave the search public so non-logged in users can find and get glimpses of you content which can act as an marketing tool!

For truly private sites you can make the search fully private with JWT-tokens.

Stateless architecture

When the crawler gets the all the index content directly from the page HTML it means the index is just a derived state from pages. This has some really nice consequences.

Crawls are idempotent eg. they are like the PUT method in REST APIs. If a page HTML has not changed the crawl will always produce the same document into the index. This makes the index content predicable since you can always determine why the index content is what it is.

The search index can be always rebuild. For example if you need to move a site to a different Findkit organization you can just copy the project TOML file over and run the crawl and you’ll get the same result.

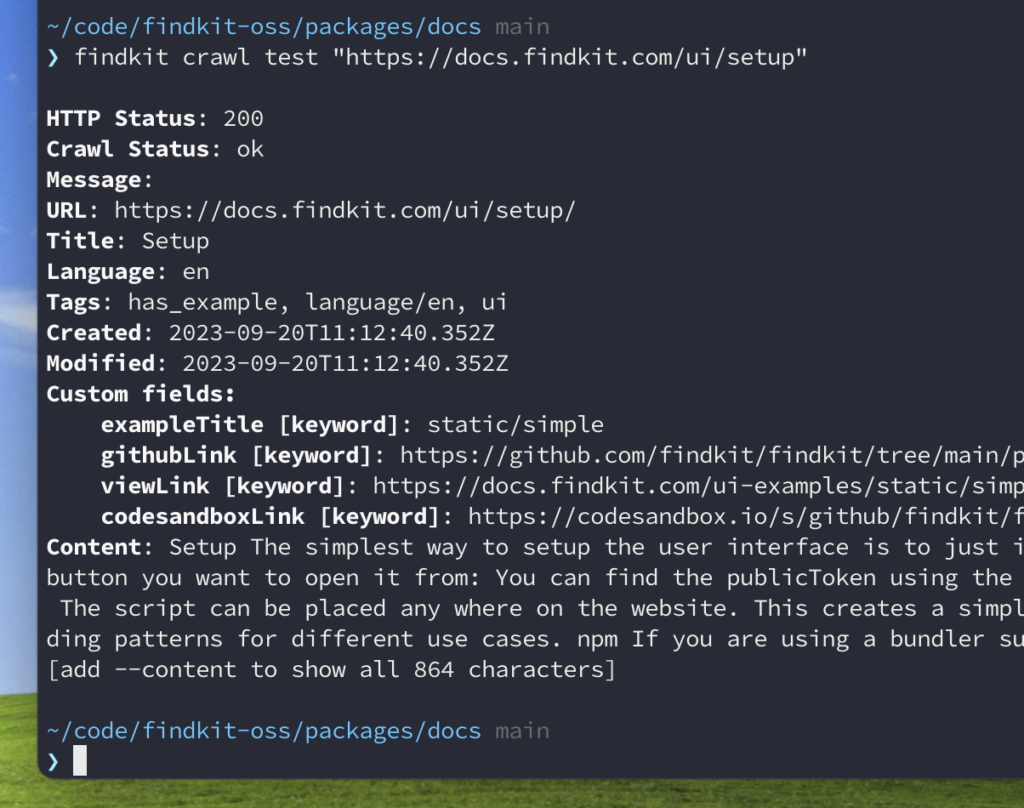

You can always check what index content would be for a given URL with a test crawl without actually updating the index. This really useful when developing content taggers, css selectors etc. The test crawls can be executed using our CLI: